The Problem

The problem we are attempting to solve is estimating a person's age from an image of their face. Rather than following the classic approach of treating this as a regression problem, we approach it as a classification problem using convolutional neural networks.

Setup

Dataset

We used the IMDB-WIKI data set, which contains 500k+ images with age and gender labels scraped from the IMDB website and Wikipedia. It is the largest labeled age dataset available online. The data set is unbalanced, with a heavy concentration of people aged 20 to 40. We found this data set attached to the paper "DEX: Deep EXpectation of apparent age from a single image" (Rothe, Timofte, & Van Gool). This paper was also the starting point of inspiration for our network designs.

Pre-Processing

All pictures in this dataset are raw pictures scraped from websites and required pre-processing. To pre-process the images, we used the dlib library to detect and align the faces. This library returns the four coordinates of the box containing the face. We chose to keep a 40% margin around the cropped faces because, according to the paper "DEX: Deep EXpectation of apparent age from a single image" (Rothe, Timofte, & Van Gool), the additional margin preserves more context information and improves the result. After that, we resized the faces to the same size (224x224) so they could be fed into our convolutional neural network. Due to hardware limitations, we did not have the time or processing power to run our network on the entire data set. Instead, we only used the WIKI dataset, which contains 62,359 pictures.

Hardware

We implemented our model using the Keras library with a TensorFlow backend. We ran our code on two GPU systems: the Bridges supercomputer at the Pittsburgh Supercomputing Center (PSC) and an Amazon EC2 g2.2xlarge GPU instance using the community image vict0sch, with 10GB memory.

Model

I. VGG16

Motivation: We began by trying to implement the VGG16 architecture, which is commonly used for image classification problems. As shown in Figure 1, the VGG16 architecture contains 13 convolutional layers with ReLU activation functions. These are interspersed with five max pooling layers and followed by three fully connected layers.

Figure 1: VGG16 Architecture

Implementation: We found a Keras implementation of the VGG16 architecture designed to be used with ImageNet. The last layer of the model originally contained a softmax function which output a length-1000 array, each value representing the probability that the input belonged to one of the 1000 object classes. We modified the code to output a length-100 array, each index of which corresponds to one age. We began with weights trained on ImageNet, then updated these weights as we trained on our own data set.
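
To make the length-100 output concrete, here is a minimal, framework-free sketch of how an age could be encoded as a training target and how a softmax output could be read back as an age. It is not the code we ran. The function names are hypothetical, and the mapping of index i to age i (0 to 99) is an assumption, since the index convention is not stated above.

```python
import math

NUM_AGE_CLASSES = 100  # length of the output array described above


def age_to_one_hot(age, num_classes=NUM_AGE_CLASSES):
    """Encode an integer age as a one-hot training target.

    Assumption: index i stands for age i (0..99).
    """
    if not 0 <= age < num_classes:
        raise ValueError(f"age {age} is outside 0..{num_classes - 1}")
    target = [0.0] * num_classes
    target[age] = 1.0
    return target


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predicted_age(probabilities):
    """Return the index (age) of the most probable class."""
    return max(range(len(probabilities)), key=probabilities.__getitem__)
```

Taking the most probable class is only one way to read the output; it is used here because it matches the classification framing of this section.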

Result: The model did not train very well. Because the model was so large, training took a long time, so to train in a reasonable timeframe we were forced to only use around 2000 images from our dataset. Even so, after several epochs, the training accuracy still remained very low and was not increasing.

II. Fine-Tuning VGG16

Motivation: Properly training the full VGG16 architecture on our extensive data set would take too long on our hardware. Fine-tuning a pretrained model on a new data set is a way to take advantage of the training done by others.

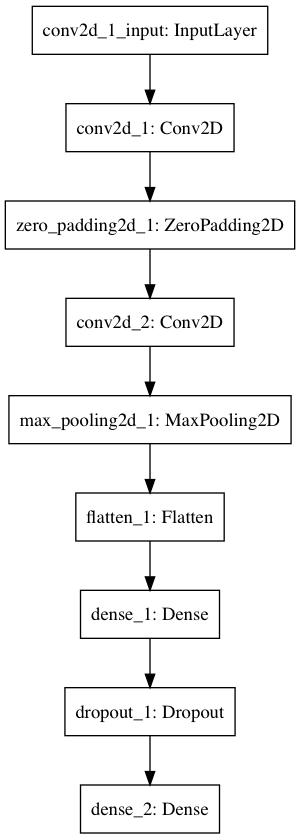

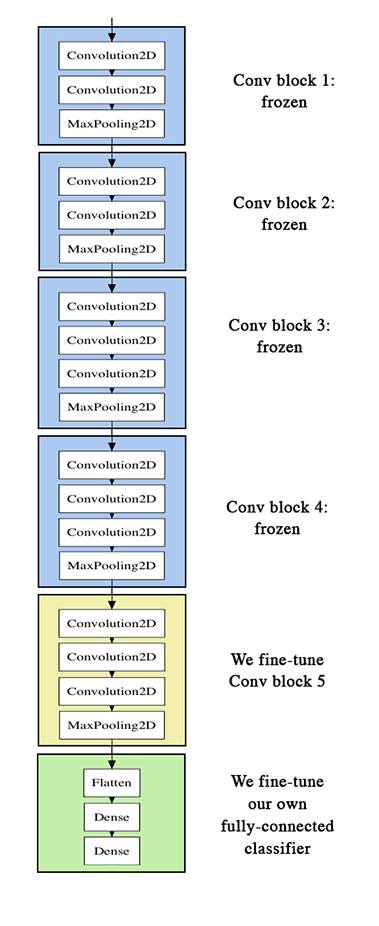

Implementation: Inspired by an implementation which fine-tuned a VGG16 model trained on ImageNet to differentiate dogs from cats, we created a top-level model containing two convolutional layers (the full model is shown in Figure 2). We planned to first train our top-level model on our data set, then to refine the weights of the final convolutional block of the VGG16 model, and finally to train the whole network together (VGG16 model + our top-level model). We were unable to find a Keras model pretrained for any age estimation problem, so we instead tried to use a model pretrained on ImageNet (which classifies objects, including people, into one of 1000 categories). This process is shown in Figure 3.
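
The three planned stages can be summarized as a small table of which layer groups would be trainable at each stage. This is a sketch of the plan only, not code we ran. The group names are hypothetical labels (the block names follow the usual VGG16 naming), and keeping the top-level model trainable during stage 2 is an assumption.

```python
# Layer groups from input to output. "top_model" is a hypothetical name for
# the small model trained on top of VGG16.
LAYER_GROUPS = ["block1", "block2", "block3", "block4", "block5", "top_model"]

# Groups whose weights would be updated at each planned stage.
STAGES = {
    1: {"top_model"},            # VGG16 frozen, train the top-level model only
    2: {"block5", "top_model"},  # also refine the final convolutional block (assumed)
    3: set(LAYER_GROUPS),        # train the whole network together
}


def trainable_flags(stage):
    """Return {group name: True if trainable} for the given stage (1, 2, or 3)."""
    trainable = STAGES[stage]
    return {group: group in trainable for group in LAYER_GROUPS}
```

In Keras, flags like these would correspond to setting the `trainable` attribute on each layer before compiling the model.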

Result: We never got the chance to try the entire model together. The fine-tuning approach involves first training the top-level model fairly well on the data set while the pre-trained model weights are frozen, then fine-tuning the pre-trained weights to improve accuracy. However, we were unable to accomplish the first step. Our accuracy began extremely low. The training accuracy improved marginally, but the validation accuracy showed little or no increase.

Figure 2: Top-level model we implemented on top of VGG16.

Figure 3: This diagram shows our planned fine-tuning strategy. The blue blocks (part of the VGG16 architecture) would remain frozen while we trained our top-level model.

III. New Convolutional Model

Motivation: Realizing that the VGG16 architecture was perhaps too large for our purposes, we implemented the convolutional model described in "Age and Gender Classification using Convolutional Neural Networks" (Levi & Hassner), which achieved about 50% accuracy at classifying people into age categories, each spanning approximately 6 years.

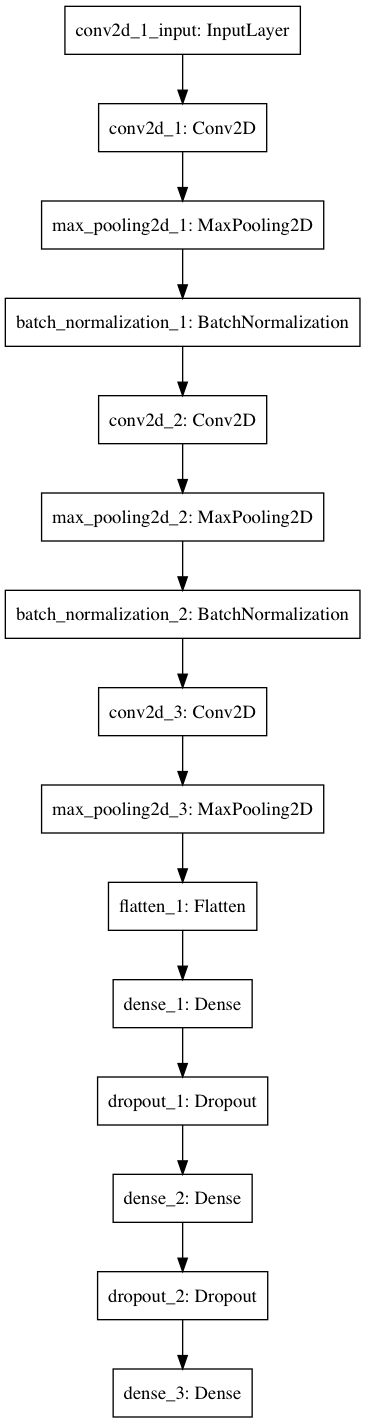

Implementation: The model involved three blocks of layers, each of which contained a convolutional layer with a ReLU activation function, a pooling layer, and a normalization layer. After these blocks the data was flattened, and the model ended with three fully connected layers, each separated from the others by a dropout layer. In the final layer, a softmax function was used to obtain a probability distribution giving the probability of the person being in each of our 100 age classes. You can see the full model in Figure 4.

Result: As we trained our model, the training accuracy increased steadily, but the validation accuracy and loss showed no movement, which we believe is evidence of overfitting. To deal with this issue, we tried increasing the size of our dataset, but ran into memory/space errors. We also tried adding extra dropout layers after each convolutional block, but these just prevented the network from training at all. We also tried adjusting our batch size and learning rate, but these had no noticeable effect on training.

Figure 4: New Convolutional Model

IV. Larger Age Classes

Motivation: We realized that trying to classify faces into 100 age classes is difficult, as the difference between the features of people one year apart is negligible. We decided to instead use 5 larger age classes, which we hoped would have more distinctive characteristics. This was also the approach used by Levi & Hassner in their paper "Age and Gender Classification using Convolutional Neural Networks."

Implementation: We used 5 age classes, each of which covered a 20-year spread (0-20, 21-40, 41-60, 61-80, 81-100).
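
As an illustration, the mapping from an age to one of these five classes can be sketched as below. The function and constant names are hypothetical, and numbering the classes 0 to 4 is an assumption.

```python
from bisect import bisect_left

# Inclusive upper bound of each class: 0-20, 21-40, 41-60, 61-80, 81-100.
CLASS_UPPER_BOUNDS = [20, 40, 60, 80, 100]


def age_to_class(age):
    """Map an integer age in 0..100 to a class index in 0..4."""
    if not 0 <= age <= 100:
        raise ValueError(f"age {age} is outside 0..100")
    # bisect_left returns the first class whose upper bound is >= age.
    return bisect_left(CLASS_UPPER_BOUNDS, age)
```

Note that the first class covers 21 distinct ages (0 through 20), while the others cover 20 each.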

Result: The initial accuracy jumped up dramatically (as expected, because there were fewer classes). As before, the training accuracy increased and the training loss decreased throughout training. For the first 7-10 epochs, the validation loss fell and the validation accuracy rose at a similar rate to the training set, but both then quickly plateaued.
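
One simple way to locate such a plateau in a recorded training history is to find the last epoch at which the validation loss set a new best value. The helper below is an illustrative sketch, not something we used; the function name and the `min_delta` threshold are assumptions.

```python
def last_improving_epoch(val_losses, min_delta=0.0):
    """Return the 1-based epoch at which validation loss last improved.

    An epoch counts as an improvement only if its loss is lower than the
    best loss so far by more than `min_delta`. Returns 0 for an empty list.
    """
    best = float("inf")
    best_epoch = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best - min_delta:
            best = loss
            best_epoch = epoch
    return best_epoch
```

If this epoch is far from the end of training while the training loss keeps falling, the later epochs are mostly fitting the training set.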

V. Balanced Data Set

Motivation: Our data set is heavily skewed toward people age 20-40, which means that most of our samples belong in just one class.

Implementation: We chose equal numbers of samples from each age class. In total, we used 2000 images for training.
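
A minimal sketch of this kind of balanced selection is shown below. It is not the code we ran, and the function name and seed parameter are hypothetical. If the five classes from the previous section are assumed, 2000 images would correspond to 400 per class.

```python
import random
from collections import defaultdict


def balanced_sample(labels, per_class, seed=0):
    """Pick `per_class` sample indices from every class in `labels`.

    `labels[i]` is the class of sample i. Returns the chosen indices in
    ascending order. Raises ValueError if a class has too few samples.
    """
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    rng = random.Random(seed)  # fixed seed so the selection is repeatable
    chosen = []
    for label, indices in sorted(by_class.items()):
        if len(indices) < per_class:
            raise ValueError(f"class {label} has only {len(indices)} samples")
        chosen.extend(rng.sample(indices, per_class))
    return sorted(chosen)
```

Because every class is cut down to the same count, the rarest class limits how many images can be used in total.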

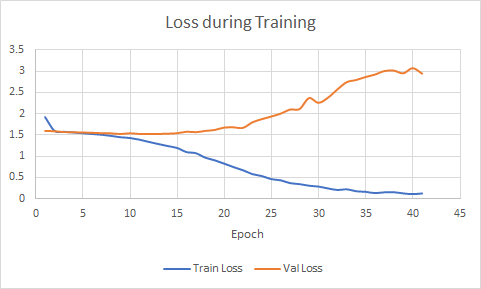

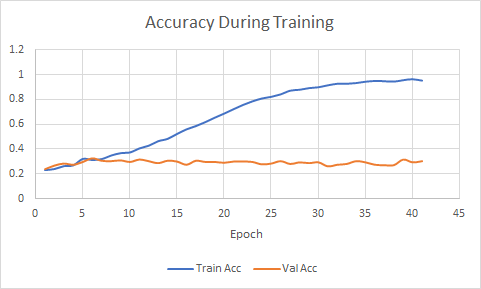

Result: There were no noticeable improvements. The result of one round of training is displayed in Figure 5.

Figure 5: Loss and training accuracy over 41 epochs.

Conclusion

Our network was relatively unsuccessful at estimating the age of individuals in the data set. We believe these are contributing factors:

- Overfitting: Even in our most functional models, where the training accuracy increased until it plateaued near 100%, the validation accuracy quickly stopped increasing. Although we tried to avoid this with methods such as increasing the number of dropout layers and using more images, overfitting remains a large problem.

- Difficult classification problem: Age estimation is a difficult problem, because people's apparent age may differ from their actual age. Our data set made the problem even more difficult. The images featured faces at a variety of angles, some with makeup or hats, making it more difficult to identify trends.

- Data set size: Our data set contained over 500k images, but we were only able to use a small subset of them due to hardware restrictions. Training on the entire data set could help us improve accuracy.

- Pretrained weights: The original paper on which we based much of our approach fine-tuned its model from pretrained weights. The model described in the paper was the winner of a previous age estimation challenge. However, this set of weights is only available for the Caffe framework. When we realized our methods were not working, it was too late for us to shift from Keras to Caffe.

Even so, we are pleased that the results of our network did show some improvement after the attempts described above.

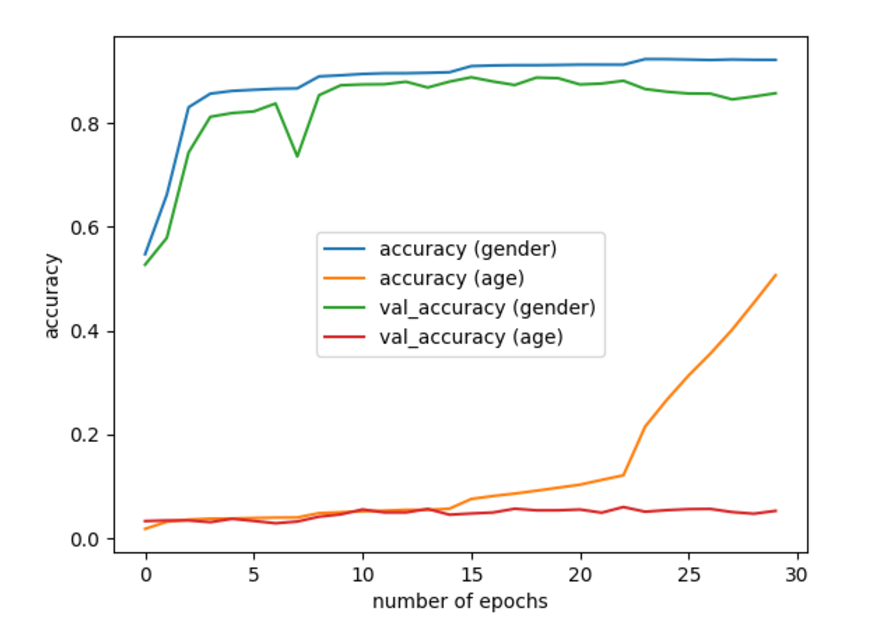

Figure 6: Result from other implementation.

Our progress shows that the network does learn a little, though the improvement is not very significant. During the first seven epochs, validation loss decreases and validation accuracy increases from 20% to 30%, which approximately matches the rate at which the training accuracy increases.

Future Work

For future work, we would like to improve and extend the current network in the following ways:

- To improve our convolutional net, a better dataset (with all faces facing forward, for instance) could give significantly improved results. Also, we received the advice during our presentation that the 40% margin might be one source of error. Though this is the strategy used in one paper we read to improve accuracy, we are curious whether extra context information contributes to overfitting or better accuracy.

- We would like to use our network model to try to guess a person's gender from their face. Hopefully, this will be a far easier classification problem since there are only two categories. We began to implement this, but have not yet obtained useful results, largely due to the long time needed to train on large data sets.

- Age and gender might be two correlated labels, i.e. the gender of an individual might affect their perceived age (according to the neural network). If that is the case, the problem is not only a multi-class but also a multi-label problem. We want to try out the approach of multi-label classification on this problem.

- Our original plan included trying out age estimation using a self-organizing map. Due to time constraints, we didn't get the chance to do it. This topic is something we would like to explore in the future.

- Use our model to solve more real-world problems. For example, Nexar has an upcoming data challenge which involves recognizing signal lights to improve self-driving car technology. Real-world problems like this, which are solvable by convolutional networks similar to ours, interest us a lot.